Semantic search

Gotta love it

Don't do research if you don't really love it. Financially, it's disastrous. It's the "worst pay for the investment", according to CNN.

Good thing I love it. And good thing Google loves the Semantic Web as well. Or why else do they make my desktop more and more semantic? I just installed the Desktop2 Beta - and it is pretty cool. And it's wide open to Semantic Stuff.

Gödel and Leibniz

Gödel in his later age became obsessed with the idea that Leibniz had written a much more detailed version of the Characteristica Universalis, and that this version was intentionally censored and hidden by a conspiracy. Leibniz had discovered what he had hunted for his whole life, a way to calculate truth and end all disagreements.

I'm surprised that it was Gödel in particular who obsessed over this idea, because I'd think that someone with Leibniz' smarts would have benefitted tremendously from Gödel's proofs, and it might have been a helpful antidote to his own obsession with making truth a question of mathematics.

And wouldn't it seem likely to Gödel that even if there were such a Characteristica Universalis by Leibniz, then he, Gödel himself, if no one else before him, would have been the one to find the fatal bug in it?

Gödel and physics

"A logical paradox at the heart of mathematics and computer science turns out to have implications for the real world, making a basic question about matter fundamentally unanswerable."

I just love this sentence, published in "Nature". It raises (and somehow exposes the author's intuition about) one of the deepest questions in science: how are mathematics, logic, computer science, i.e. the formal sciences, on the one side, and the "real world" on the other side, related? What is the connection between math and reality? The author seems genuinely surprised that logic has "implications for the real world" (never mind that "implication" is a logical term), and seems to struggle with the idea that a counter-intuitive theorem by Gödel, which has been studied and scrutinized for 85 years, would also apply to equations in physics.

Unfortunately the fundamental question does not really get tackled: the work described here, as fascinating as it is, was an intentional, multi-year effort to find a place in the mathematical models used in physics where Gödel can be applied. They are not really discussing the relation between maths and reality, but between pure mathematics and mathematics applied in physics. The original deep question remains unsolved and will befuddle students of math and the natural sciences for years, and probably decades, to come (except for Stephen Wolfram, who believes he has it all solved in NKS, but that's another story).

Nature: Paradox at the heart of mathematics makes physics problem unanswerable

Phys.org: Quantum physics problem proved unsolvable: Godel and Turing enter quantum physics

Gödel on language

- "The more I think about language, the more it amazes me that people ever understand each other at all." - Kurt Gödel

Gödel's naturalization interview

When Gödel went to his naturalization interview, his good friend Einstein accompanied him as a witness. On the way, Gödel told Einstein about a gap in the US constitution that would allow the country to be turned into a dictatorship. Einstein told him to not mention it during the interview.

The judge they came to was the same judge who had already naturalized Einstein. The interview went well until the judge asked whether Gödel thought that the US could face the same fate and slip into a dictatorship, as Germany and Austria did. Einstein became alarmed, but Gödel started discussing the issue. The judge noticed, changed the topic quickly, and the process came to the desired outcome.

I wonder what that was, that Gödel found, but that's lost to history.

Happy New Year 2021!

2020 was a challenging year, particularly due to the pandemic. Some things were very different, some things were dangerous, and the pandemic exposed the fault lines in many societies in a most tragic way around the world.

Let's hope that 2021 will be better in that respect, that we will have learned from how the events unfolded.

But I'm also amazed by how fast the vaccine was developed and made available to tens of millions.

I think there's some chance that the summer of '21 will become one to sing about for a generation.

Happy New Year 2021!

Happy New Year, 2023!

For starting 2023, I will join the Bring Back Blogging challenge. The goal is to write three posts in January 2023.

Since I have been blogging on and off the last few years anyway, that shouldn't be too hard.

Another thing this year should bring is to launch Wikifunctions, the project I have been working on since 2020. It was a longer ride than initially hoped for, but here we are, closer to launch than ever. The Beta is available online, and even though not everything works yet, I was already able to impress my kid with the function to reverse a text.

Looking forward to this New Year 2023, a number that to me still sounds like it is from a science fiction novel.

History of knowledge graphs

An overview of the history of ideas leading to knowledge graphs, with plenty of references. Useful for anyone who wants to understand the background of the field, and probably the best current such overview.

Hot Skull

I watched Hot Skull on Netflix, a Turkish Science Fiction dystopic series. I knew there was only one season, and no further seasons were planned, so I was expecting that the story would be resolved - but alas, I was wrong. And the book the show is based on is only available in Turkish, so I wouldn't know of a way to figure out how the story ends.

The premise is that there is a "semantic virus", a disease that makes people 'jabber', to talk without meaning (but syntactically correct), and to be unable to convey or process any meaning anymore (not through words, and very limited through acts). They also seem to lose the ability to participate in most parts of society, but they still take care of eating, notice wounds or if their loved ones are in distress, etc. Jabbering is contagious: if you hear someone jabber, you start jabbering as well, jabberers cannot stop talking, and it quickly became a global pandemic. So they are somehow zombieish, but not entirely, raising questions about them still being human, their rights, etc. The hero of the story is a linguist.

Unfortunately, the story revolves around the (global? national?) institution that tries to bring the pandemic under control, and which has taken over a lot of power (which echoes some of the conspiracy theories of the COVID pandemic), and the fact that this institution is not interested in finding a cure (because going back to the former world would require them to give back the power they gained). The world has slid into economic chaos, e.g. getting chocolate becomes really hard, there seems to be only little international cooperation and transportation going on, but there seems to be enough food (at least in Istanbul, where the story is located). Information about what happened in the rest of the world is rare, but everyone seems affected.

I really enjoyed the very few and rare moments where they explored the semantic virus and what it does to people. Some of them are heart-wrenching, some of them are interesting, and in the end we get indications that there is a yet unknown mystery surrounding the disease. I hope the book at least resolves that, as we will probably never learn how the Netflix show was meant to end. The dystopic parts about a failing society, the whole plot about an "organization taking over the world and secretly fighting a cure", and the resistance to that organization, are tired, not particularly well told, standard dystopic fare.

The story is told very slowly and meanders leisurely. I really like the 'turkishness' shining through in the production: Turkish names, characters eating simit, drinking raki, Istanbul as an (underutilized) background, the respect for elders, this is all very well meshed into the sci-fi story.

No clear recommendation to watch, mostly because the story is unfinished, and there is simply not enough payoff for the lengthy and slow eight episodes. I was curious about the premise, and still would like to know how the story ends, what the authors intended, but it is frustrating that I might never learn.

How much April 1st?

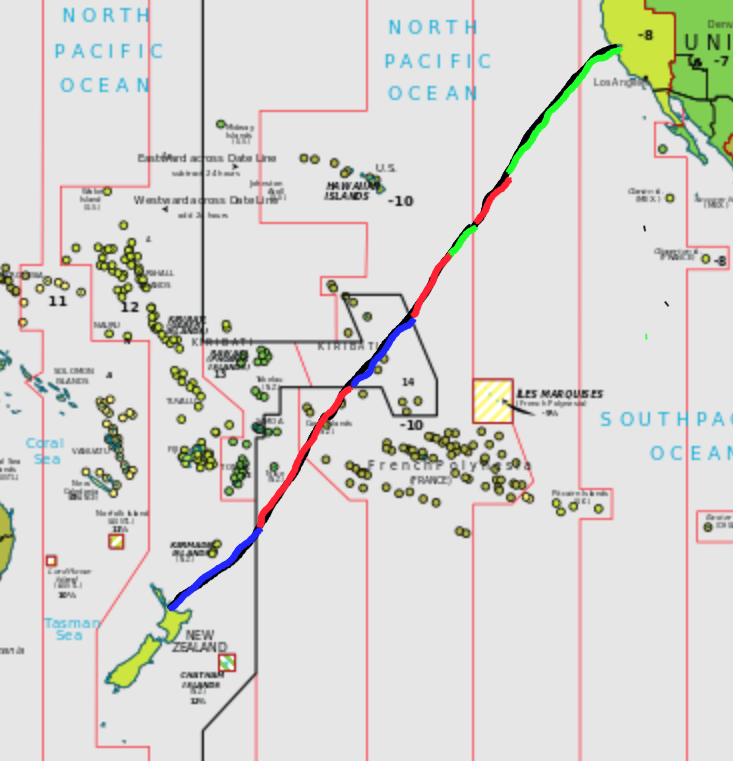

In my previous post, I was stating that I might miss April 1st entirely this year, and not as a joke, but quite literally. Here I am chronicling how that worked out. We were flying flight NZ7 from San Francisco to Auckland, starting on March 31st and landing on April 2nd, and here we look into far too much detail to see how much time the plane spent in April 1st during that 12 hours 46 minutes flight. There’s a map below to roughly follow the trip.

5:45 UTC / 22:45 31/3 local time / 37.62° N, 122.38° W / PDT / UTC-7

The flight started with taxiing for more than half an hour. We left the gate at 22:14 PDT (doesn’t bode well), and liftoff was at 22:45 PDT. So we had only about an hour of March left at local time. We were soon over the Pacific Ocean, as we would stay for basically the whole flight. Our starting point still had 1 hour 15 minutes left of March 31st, whereas our destination at this time was at 18:45 NZDT on April 1st, so still had 5 hours 15 minutes to go until April 2nd. Amusingly this would also be the night New Zealand switches from daylight saving time (NZDT) to standard time (NZST). Not the other way around, because the seasons are opposite in the southern hemisphere.

6:00 UTC / 23:00 31/3 local time / 37° N, 124° W / PDT / UTC-7

We are still well in the PDT / UTC-7 time zone, which, in general, goes to 127.5° W, so the local time is 23:00 PDT. We keep flying southwest.

6:27 UTC / 22:27 31/3 local time? / 34.7° N, 127.5° W / AKDT? / UTC-8?

About half an hour later, we reach the time zone border, moving out of PDT to AKDT, Alaska Daylight Time, but since Alaska is far away it is unclear whether daylight saving applies here. Also, at this point we are 200 miles (320 km) out on the water, and thus well out of the territorial waters of the US, which extend 12 nautical miles (that is, 14 miles or 22 km), so maybe the daylight saving time in Alaska does not apply and we are in international waters? One way or the other, we moved back in local time: it is suddenly either 22:27 AKDT or even 21:27 UTC-9, depending on whether daylight saving time applies or not. For now, April 1 was pushed further back.

7:00 UTC / 23:00 31/3 local time? / 31.8° N, 131.3° W / AKDT? / UTC-8?

Half an hour later and midnight has reached San Francisco, and April 1st has started there. We were more than 600 miles or 1000 kilometers away from San Francisco, and in local time either at 23:00 AKDT or 22:00 UTC-9. We are still in March, and from here all the way to the Equator and then some, UTC-9 stretches to 142.5° W. We are continuing southwest.

8:00 UTC / 23:00 31/3 local time / 25.2° N, 136.8° W / GAMT / UTC-9

We are halfway between Hawaii and California. If we are indeed in AKDT, it would be midnight - but given that we are so far south, far closer to Hawaii, which does not have daylight saving time, and deep in international waters anyway, it is quite safe to assume that we really are in UTC-9. So local time is 23:00 UTC-9.

9:00 UTC / 0:00 4/1 local time / 17.7° N, 140.9° W / GAMT / UTC-9

There is no denying it, we are still more than a degree away from the safety of UTC-10, the Hawaiian time zone. It is midnight in our local time zone. We are in April 1st. Our plan has failed. But how long would we stay here?

9:32 UTC / 23:32 31/3 local time / 13.8° N, 142.5° W / HST / UTC-10

We have been in April 1st for 32 minutes. Now we cross from UTC-9 to UTC-10. We jump back from April to March, and it is now 23:32 local time. The 45 minutes of delayed take-off would have easily covered for this half hour of April 1st so far. The next goal is to move from UTC-10, but the border of UTC-10 is a bit irregular between Hawaii, Kiribati, and French Polynesia, looking like a hammerhead. In 1994, Kiribati pushed the Line Islands a day forward, in order to be able to claim to be the first ones into the new millennium.

10:00 UTC / 0:00 4/1 local time / 10° N, 144° W / HST / UTC-10

We are pretty deep in HST / UTC-10. It is again midnight local time, and again April 1st starts. How long will we stay there now? For the next two hours, the world will be in three different dates: in UTC-11, for example American Samoa, it is still March 31st. Here in UTC-10 it is April 1st, as it is in most of the world, from New Zealand to California, from Japan to Chile. But in UTC+14, on the Line Islands, 900 miles southwest, it is already April 2nd.

11:00 UTC / 1:00 4/1 local time / 3° N, 148° W / HST / UTC-10

We are somewhere east of the Line Islands. It is now midnight in New Zealand and April 1st has ended there. Even without the delayed start, we would now be solidly in April 1st local time.

11:24 UTC / 1:24 4/1 local time / 0° N, 150° W / HST / UTC-10

We just crossed the equator.

12:00 UTC / 2:00 4/2 local time / 3.7° S, 152.3° W / LINT / UTC+14

The international date line in this region does not go directly north-south, but runs at an angle, so without further calculation it is difficult to exactly say when we crossed the international date line, but it would be very close to this time. So we just went from 2am local time in HST / UTC-10 on April 1st to 2am local time in LINT / UTC+14 on April 2nd! This time, we have been in April 1st for a full two hours.

(Not for the first time, I wish Wikifunctions already existed. I am pretty sure that taking a geocoordinate and returning the respective timezone will be a function that will be available there. There are a number of APIs out there, but none of them seems to provide a Web interface, and they all seem to require a key.)
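Until such a function exists, the purely nautical rule can be sketched in a few lines of Python. This is only an approximation under loud assumptions: it treats time zones as ideal strips, 15° wide and centered on multiples of 15° of longitude, and it ignores political borders, daylight saving time, and the detours of the international date line (so it never returns UTC+13 or UTC+14). The function name `nautical_utc_offset` is made up for this illustration.

```python
import math

def nautical_utc_offset(longitude_deg: float) -> int:
    """Nominal UTC offset in hours for a longitude (east positive, west negative).

    Assumption: idealized nautical zones, 15 degrees wide, centered on
    multiples of 15 degrees. Political borders and DST are ignored.
    """
    if not -180.0 <= longitude_deg <= 180.0:
        raise ValueError("longitude must be between -180 and 180 degrees")
    # Round to the nearest whole zone; floor(x + 0.5) avoids banker's rounding.
    return math.floor(longitude_deg / 15.0 + 0.5)

# A few longitudes along the route, all west of Greenwich.
for lon in (-124.0, -136.8, -140.9, -144.0, -170.3):
    print(f"{abs(lon):5.1f}° W -> UTC{nautical_utc_offset(lon):+d}")
```

For 124° W this gives the standard-time offset UTC-8; PDT is one hour ahead of that, which is exactly the kind of detail the sketch leaves out.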

12:44 UTC / 2:44 4/1 local time / 8° S, 156° W / HST / UTC-10

We just crossed the international date line again! Back from Line Island Time we move to French Polynesia, back from UTC+14 to UTC-10 again - which means it switches from 2:44 on April 2nd back to 2:44 on April 1st! For the third time, we go to April 1st - but for the first time we don’t enter it from March 31st, but from April 2nd! We just traveled back in time by a full day.

13:00 UTC / 3:00 4/1 local time / 9.6° S, 157.5° W / HST / UTC-10

We are passing between the Cook Islands and French Polynesia. In New Zealand, daylight saving time ends, and it switches from 3:00 local time in NZDT / UTC+13 to 2:00 local time in NZST / UTC+12. While we keep flying through the time zones, New Zealand declares itself to a different time zone.

14:00 UTC / 4:00 4/1 local time / 15.6° S, 164.5° W / HST / UTC-10

We are now “close” to the Cook Islands, which are associated with New Zealand. Unlike New Zealand, the Cook Islands do not observe daylight saving time, so at least one thing we don’t have to worry about. I find it surprising that the Cook Islands are not in UTC+14 but in UTC-10, considering they are in association with New Zealand. On the other side, making that flip would mean they would literally lose a day. Hmm. That could be one way to avoid an April 1st!

14:27 UTC / 3:27 4/1 local time / 18° S, 167° W / SST / UTC-11

We move from UTC-10 to UTC-11, from 4:27 back to 3:27am, from Cook Island Time to Samoa Standard Time. Which, by the way, is not the time zone in the independent state of Samoa, as they switched to UTC+13 in 2011. Also, all the maps on the UTC articles in Wikipedia (e.g. UTC-12) are out of date, because their maps are from 2008, not reflecting the change of Samoa.

15:00 UTC / 4:00 4/1 local time / 21.3° S, 170.3° W / SST / UTC-11

We are south of Niue and east of Tonga, still east of the international date line, in UTC-11. It is 4am local time (again, just as it was an hour ago). We will not make it to UTC-12, because there is no UTC-12 at these latitudes. The interesting thing about UTC-12 is that, even though no one lives in it, it is relevant for academics all around the world as it is the latest time zone, also called Anywhere-on-Earth, and thus relevant for paper submission deadlines.

15:23 UTC / 3:23 4/2 local time / 23.5° S, 172.5° W / NZST / UTC+12

We crossed the international date line again, for the third and final time for this trip! Which means we move from 4:23 am on April 1st local time in Samoa Standard Time to 3:23 am on April 2nd local time in NZST (New Zealand Standard Time). We have now reached our destination time zone.

16:34 UTC / 4:34 4/2 local time / 30° S, 180° W / NZST / UTC+12

We just crossed from the Western into the Eastern Hemisphere. We are about halfway between New Zealand and Fiji.

17:54 UTC / 5:54 4/2 local time / 37° S, 174.8° E / NZST / UTC+12

We arrived in Auckland. It is 5:54 in the morning, on April 2nd. Back in San Francisco, it is 10:54 in the morning, on April 1st.

Green is March 31st, Red April 1st, Blue April 2nd, local times during the flight.

Basemap https://commons.wikimedia.org/wiki/File:Standard_time_zones_of_the_world_%282012%29_-_Pacific_Centered.svg CC-BY-SA by TimeZonesBoy, based on PD by CIA World Fact Book

Postscript

Altogether, there was not one April 1st, but three stretches of April 1st: first, for 32 minutes before returning to March 31st, then for 2 hours again, then we switched to April 2nd for 44 minutes and returned to April 1st for a final 2 hours and 39 minutes. If I understand it correctly, and I might well not, as thinking about this causes a knot in my brain, the first stretch would have been avoidable with a timely start, the second could have been much shorter, but the third one would only be avoidable with a different and longer flight route, in order to stay west of the international date line, going south around Samoa.

In total, we spent 5 hours and 11 minutes in April 1st, in three separate stretches. Unless Alaskan daylight saving counts in the Northern Pacific, in which case it would be an hour more.
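The total can be checked with a few lines of Python. The stretch boundaries below are the UTC times from the log above, under the assumption that UTC-9 (not Alaskan daylight saving time) applies in the Northern Pacific; the helper `utc` is just a name picked for this sketch.

```python
from datetime import datetime, timedelta

def utc(hhmm: str) -> datetime:
    """Parse an HH:MM UTC time on the day of the flight (2023-04-01 UTC)."""
    return datetime.strptime(f"2023-04-01 {hhmm}", "%Y-%m-%d %H:%M")

# (start, end) in UTC of each stretch during which the local date was April 1st.
stretches = [
    (utc("09:00"), utc("09:32")),  # midnight in UTC-9 until crossing into UTC-10
    (utc("10:00"), utc("12:00")),  # midnight in UTC-10 until the date line (UTC+14)
    (utc("12:44"), utc("15:23")),  # back east of the date line until the final crossing
]

total = sum((end - start for start, end in stretches), timedelta())
hours, minutes = divmod(int(total.total_seconds()) // 60, 60)
print(f"{hours} hours {minutes} minutes")
```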

So, I might not have skipped April 1st entirely this year, but me and the other folks on the plane might well have had the shortest April 1st of anyone on the planet this year.

I totally geeked out on this essay. If you find errors, I would really appreciate corrections. Either in Mastodon, mas.to/@vrandecic, or on Twitter, @vrandecic. Email is the last resort, vrandecic@gmail.com (The map though is just a quick sketch)

One thing I was reminded of is, as Douglas Adams correctly stated, that writing about time travel really messes up your grammar.

The source for the flight data is here:

- https://flightaware.com/live/flight/ANZ7/history/20230401/0510Z/KSFO/NZAA

- https://flightaware.com/live/flight/ANZ7/history/20230401/0510Z/KSFO/NZAA/tracklog

How much information is in a language?

About the paper "Humans store about 1.5 megabytes of information during language acquisition", by Francis Mollica and Steven T. Piantadosi.

This is one of those papers that I both love - I find the idea is really worthy of investigation, having an answer to this question would be useful, and the paper is very readable - and can't stand, because the assumptions in the paper are so unconvincing.

The claim is that a natural language can be encoded in ~1.5MB - a little bit more than a floppy disk. And the largest part of this is the lexical semantics (in fact, without the lexical semantics, the rest is less than 62kb, far less than a short novel or book).

They introduce two methods for estimating how many bytes we need to encode the lexical semantics:

Method 1: let's assume 40,000 words in a language (languages have more words, but the assumption in the paper is about how many words one learns before turning 18, and for that 40,000 is probably an OK estimate, although likely on the lower end). If there are 40,000 words, there must be 40,000 meanings in our heads, and lexical semantics is the mapping of words to meanings, and there are only so many possible mappings, and choosing one of those mappings requires 553,809 bits. That's their lower estimate.
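As a sanity check, here is a small Python sketch that reproduces that figure under one assumption that is not spelled out above: that the possible mappings are the one-to-one assignments of 40,000 word forms to 40,000 meanings, i.e. 40,000! of them. Picking one of them then takes log2(40,000!) bits. The function name is invented for this example.

```python
import math

def bits_to_choose_mapping(n_words: int) -> float:
    """Return log2(n!), the bits needed to pick one of n! one-to-one mappings.

    Assumption: every word form maps to exactly one meaning and vice versa.
    lgamma(n + 1) equals ln(n!), which avoids computing the huge factorial.
    """
    return math.lgamma(n_words + 1) / math.log(2)

bits = bits_to_choose_mapping(40_000)
print(f"{int(bits):,} bits, roughly {bits / 8 / 1000:.0f} kB")
```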

Wow. I don't even know where to begin in commenting on this. The assumption that all the meanings of words just float in our head until they are anchored by actual word forms is so naive, it's almost cute. Yes, that is likely true for some words. Mother, Father, in the naive sense of a child. Red. Blue. Water. Hot. Sweet. But for a large number of word meanings I think it is safe to assume that without a language those word meanings wouldn't exist. We need language to construct these meanings in the first place, and then to fill them with life. You can't simply attach a word form to that meaning, as the meaning doesn't exist yet, breaking down the assumptions of this first method.

Method 2: let's assume all possible meanings occupy a vector space. Now the question becomes: how big is that vector space, how do we address a single point in that vector space? And then the number of addresses multiplied with how many bits you need for a single address results in how many bits you need to understand the semantics of a whole language. For the number of dimensions, their lower bound is 300 and their upper bound is 500. For the bits per dimension, their lower bound is that you either have a dimension or not, i.e. that only a single bit per dimension is needed, and their upper bound is that you need 2 bits per dimension, so you can grade each dimension a little. I have read quite a few papers with this approach to lexical semantics. For example it defines "girl" as +female, -adult, "boy" as -female,-adult, "bachelor" as +adult,-married, etc.

So they get to 40,000 words x 300 dimensions x 1 bit = 12,000,000 bits, or 1.5MB, as the lower bound of Method 2 (which they then take as the best estimate because it is between the estimate of Method 1 and the upper bound of Method 2), or 40,000 words x 500 dimensions x 2 bits = 40,000,000 bits, or 5MB.
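The arithmetic of Method 2 is easy to replay. The sketch below takes a megabyte to be 1,000,000 bytes (an assumption; with that convention 12,000,000 bits are 1.5 MB and 40,000,000 bits are 5 MB), and the function and constant names are made up for illustration.

```python
BITS_PER_BYTE = 8
BYTES_PER_MB = 1_000_000  # assumption: decimal megabytes

def lexicon_megabytes(words: int, dimensions: int, bits_per_dimension: int) -> float:
    """Size of a lexicon if every word is one point in the vector space."""
    total_bits = words * dimensions * bits_per_dimension
    return total_bits / BITS_PER_BYTE / BYTES_PER_MB

lower = lexicon_megabytes(40_000, 300, 1)  # one bit per dimension
upper = lexicon_megabytes(40_000, 500, 2)  # two bits per dimension
print(f"lower bound: {lower} MB, upper bound: {upper} MB")
```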

Again, wow. Never mind that there is no place to store the dimensions - what are they, what do they mean? - probably the assumption is that they are, like the meanings in Method 1, stored prelinguistically in our brains and just need to be linked in as dimensions. But also the idea that all meanings expressible in language can fit in this simple vector space. I find that theory surprising.

Again, this reads like a rant, but really, I thoroughly enjoyed this paper, even if I entirely disagree with it. I hope it will inspire other papers with alternative approaches towards estimating these numbers, and I'm very much looking forward to reading them.

- Humans store about 1.5MB of information during language acquisition, Royal Society Open Science

I am weak

Basically I was working today, instead of doing some stuff I should have finished a week ago for some private activities.

The challenge I posed myself: how semantic can I already get? What tools can I already use? Firefox has some pretty neat extensions, like FOAFer, or the del.icio.us plugin. I'll see if I can work with them, if there's a real payoff. The coolest, somehow semantic plugin I installed is SearchStatus. It shows me the PageRank and the Alexa rating of the visited site. I think that's really great. It gives me just the first glimpse of what metadata can do to help me be an informed user. The Link Toolbar should be absolutely necessary, but sadly it isn't, as not enough people make use of HTML's link element the way it is supposed to be used.

Totally unsemantic is the mouse gestures plugin. Nevertheless, I loved those with Opera, and I'm happy to have them back.

Still, there are such neat things like an RDF editor and query engine. Installed it and now I want to see how to work with it... but actually I should go upstairs, clean my room, organise my bills and insurance and do all this real life stuff...

What's the short message? Get Firefox today and discover its extensions!

I'm a believer

The Semantic Web is promising quite a lot. Just take a look at the most cited description of the vision of the Semantic Web, written by Tim Berners-Lee and others. Many people are researching the various aspects of the SemWeb, but in personal discussions I often sense a lack of belief.

I believe in it. I believe it will change the world. It will be a huge step forward to the data integration problem. It will allow many people to have more time to spend on the things they really love to do. It will help people organize their lives. It will make computers seem more intelligent and helpful. It will make the world a better place to live in.

This doesn't mean it will save the world. It will offer only "nice to have"-features, but then, so many of them that you will hardly be able to think of another world. I hardly remember how the world was before e-mail came along (I'm not that old yet, mind you). I sometimes can't remember how we went out in the evening without a mobile. That's where I see the SemWeb in 10 years: no one will think it's essential, but you will be amazed when thinking back how you lived without it.

ISWC 2008 coming to Karlsruhe

Yeah! ISWC2006 is just starting, and I am really looking forward to it. The schedule looks more than promising, and Semantic MediaWiki is among the finalists for the Semantic Web Challenge! I will write more about this year's ISWC the next few days.

But, now the news: yesterday it was decided that ISWC2008 will be hosted by the AIFB in Karlsruhe! It's a pleasure and an honor -- and I am certainly looking forward to it. Yeah!

ISWC impressions

The ISWC 2005 is over, but I'm still in Galway, hanging around at the OWL Experiences and Direction Workshop. The ISWC was a great conference, really! Met so many people from the Summer School again, heard a surprising number of interesting talks (there are some conferences where one boring talk follows the other; that's definitely different here) and got some great feedback on some work we're doing here in Karlsruhe.

Boris Motik won the Best Paper Award of the ISWC, for his work on the properties of meta-modeling. Great paper and great work! Congratulations to him, and also to Peter Mika, though I have still to read his paper to form my own opinion.

I will follow up on some of the topics from the ISWC and the OWLED workshop, but here's my quick, first wrap-up: great conference! Only the weather was, sadly, as bad as expected. Who decided on Ireland in November?

If life was one day

If the evolution of animals was one day... (600 million years)

- From 1am to 4am, most of the modern types of animals have evolved (Cambrian explosion)

- Animals get on land a bit at 3am. Early risers! It takes them until 7am to actually breathe air.

- Around noon, first octopuses show up.

- Dinosaurs arrive at 3pm, and stick around until quarter to ten.

- Humans and chimpanzees split off about fifteen minutes ago, modern humans and Neanderthals lived in the last minute, and the pyramids were built around 23:59:59.2.
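The scaling behind these clock times is simple: 600 million years squeezed into 24 hours makes one hour 25 million years, and one second about 6,944 years. A minimal Python sketch of the conversion follows; the function name is invented, and the example inputs (225 million and 6.25 million years ago) are simply back-computed from the 3pm and "fifteen minutes ago" entries in the list, not independent dates.

```python
SPAN_YEARS = 600_000_000        # the whole "day" covers 600 million years
SECONDS_PER_DAY = 24 * 60 * 60

def clock_time(years_ago: float) -> str:
    """Map 'years before today' to a time of day, with today at midnight."""
    if not 0 <= years_ago <= SPAN_YEARS:
        raise ValueError("outside the 600 million year day")
    seconds_before_midnight = round(years_ago * SECONDS_PER_DAY / SPAN_YEARS)
    seconds = SECONDS_PER_DAY - seconds_before_midnight
    hours, rest = divmod(seconds, 3600)
    minutes, secs = divmod(rest, 60)
    return f"{hours:02d}:{minutes:02d}:{secs:02d}"

print(clock_time(225_000_000))  # the 3pm arrival of the dinosaurs
print(clock_time(6_250_000))    # fifteen minutes before midnight
```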

In that world, if that was a Sunday:

- Saturday would have started with the introduction of sexual reproduction

- Friday would have started by introducing the nucleus to the cell

- Thursday recovering from Wednesday's catastrophe

- Wednesday photosynthesis started, and led to a lot of oxygen which killed a lot of beings just before midnight

- Tuesday bacteria show up

- Monday first forms of life show up

- Sunday morning, planet Earth forms, pretty much at the same time as the Sun.

- Our galaxy, the Milky Way, is about a week older

- The Universe is about another week older - about 22 days.

There are several things that surprised me here.

- That dinosaurs were around for such an incredibly long time. Dinosaurs were around for seven hours, and humans for a minute.

- That life started so quickly after Earth was formed, but then took so long to get to animals.

- That the Earth and the Sun started basically at the same time.

Addendum April 27: Álvaro Ortiz, a graphic designer from Madrid, turned this text into an infographic.

Illuminati and Wikibase

When I was a teenager I was far too fascinated by the Illuminati. Much less by the actual historical order, and more by the memetic complex, the trilogy by Shea and Wilson, the card game by Steve Jackson, the secret society and esoteric knowledge, the Templar Story, Holy Blood of Jesus, the rule of 5, the secret of 23, all the literature and offshoots, etc etc...

Eventually I went to actual order meetings of the Rosicrucians, and learned about some of their "secret" teachings, and also read Eco's Foucault's Pendulum. That, and access to the Web and eventually Wikipedia, helped to "cure" me of this stuff: Wikipedia allowed me to put a lot of the bits and pieces into context, and the (fascinating) stories that people like Shea & Wilson or von Däniken or Baigent, Leigh & Lincoln tell, start falling apart. Eco's novel, by deconstructing the idea, helps to overcome it.

He probably doesn't remember it anymore, but it was Thomas Römer who, many years ago, told me that the trick of these authors is to tell ten implausible, but verifiable facts, and tie them together with one highly plausible, but made-up fact. The appeal of their stories is that all of it seems to check out (because back then it was hard to fact check stuff, so you would use your time to check the most implausible stuff).

I still understand the allure of these stories, and love to indulge in them from time to time. But it was the Web, and it was learning about knowledge representation, that clarified the view on the underlying facts, and when I tried to apply the methods I was learning to it, it fell apart quickly.

So it is rather fascinating to see that one of the largest and earliest applications of Wikibase, the software we developed for Wikidata, turned out to be actual bona fide historians (not the conspiracy theorists) using it to work on the Illuminati, to catalog the letters they sent to each other, to visualize the flow of information through the order, etc. Thanks to Olaf Simons for heading this project, and for this write up of their current state.

It's amusing to see things go round and round and realize that, indeed, everything is connected.

Imagine there's a revolution...

... and no one is going to it.

This notion sometimes scares me when I think about the semantic web. What if all these great ideas are just too complex to be implemented? What if it remains an ivory tower dream? But, on the other hand, how much pragmatism can we take without losing the vision?

And then, again, I see the semantic web working already: it's del.icio.us, it's flickr, it's julie, and there's so much more to come. The big time of the semantic web is yet to come, and I think none of us can really imagine the impact it is going to have. But it will definitely be interesting!

Immortal relationships

I saw a beautiful meme yesterday that said that from the perspective of a cat or dog, humans are like elves who live for five hundred years and yet aren't afraid to bond with them for their whole life. And it is depicted as beautiful and wholesome.

It's so different from all those stories of immortals, think of Vampires or Highlander or the Sandman, where the immortals get bitter, or live in misery and loss, or become aloof and uncaring about human lives and their short life spans, and where it hurts them more than it does them good.

There seem to be more stories exploring the friendship of immortals with short-lived creatures, be it in Rings of Power with the relationship of Elrond and Durin, be it the relation of Star Trek's Zora with the crew of the Discovery or especially with Craft in the short movie Calypso, or between the Eternal Sersi and Dane Whitman. All these relations seem to be depicted more positively and less tragic.

In my opinion that's a good thing. It highlights the good parts in us that we should aspire to. It shows us what we can be, based in a very common perception, the relationship to our cats and dogs. Stories are magic, in it's truest sense. Stories have an influence on the world, they help us understand the world, imagine the impact we can have, explore us who we can be. That's why I'm happy to see these more positive takes on that trope compared to the tragic takes of the past.

(I don't know if any of this is true. I think it would require at least some work to actually capture instances of such stories, classify and tally them, to see if that really is the case. I'm not claiming I've done that groundwork. I'm just capturing an observation that I'd like to be true, but can't really vouch for.)

In the beginning

"Let there be a planet with a hothouse effect, so that they can see what happens, as a warning."

"That is rather subtle, God", said the Archangel.

"Well, let it be the planet closest to them. That should do it. They're intelligent after all."

"If you say so."

Introducing rdf2owlxml

Very thoughtful - I simply forgot to publish the last entry of this blog. Well, there you see it finally... but let's move to the new news.

Another KAON2 based tool - rdf2owlxml - just got finished, a converter to turn RDF/XML-serialisation of an OWL-ontology into an OWL/XML Presentation Syntax document. And it even works with the Wine-ontology.

So, whenever you need an ontology in the easy-to-read OWL/XML Presentation Syntax - for example, in order to XSL it further to an HTML page representing your ontology, or anything like that, because it's hard to do this stuff with RDF/XML - go to rdf2owlxml and just grab the results! (The results work fine with dlpconvert as well, by the way.)

Hope you like it, but be reminded - it is a very early service right now, only a 0.2 version.

Java developers f*** the least

Andrew Newman conducted a brilliant and significant study on how often programmers use f***, and he split it by programming language. Java developers f*** the least, whereas LISP programmers use it on every fourth opportunity. In absolute terms, there are still more Java f***s, but fewer than C++ f***s.

Just to add a further number to the study -- because Andrew inexplicably omitted Python -- here's the data: about 196,000 files / 200 occurrences -> 980. That's the second highest result, placing it between Java and Perl (note that the higher the number, the fewer f***s -- I would have normalized that by taking it 1/n, but, fuck, there's always something to complain about).
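As a small sketch of the arithmetic above: the study's number is files per occurrence, and the 1/n normalization I would have preferred is occurrences per file. The function names are made up for illustration; only the Python figures (196,000 files, 200 occurrences) come from this post.

```python
def files_per_occurrence(files: int, occurrences: int) -> float:
    """The ratio as used in the study: higher means less swearing."""
    return files / occurrences


def occurrences_per_file(files: int, occurrences: int) -> float:
    """The 1/n normalization: higher means more swearing."""
    return occurrences / files


# Python numbers from this post (approximate counts).
python_ratio = files_per_occurrence(196_000, 200)
python_rate = occurrences_per_file(196_000, 200)
print(python_ratio)  # files per occurrence
print(python_rate)   # occurrences per file
```

With the normalized rate, a bigger number simply means more f***s, which reads more naturally.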

Note that Google Code Search actually is totally inconsistent with regard to its results. A search for f*** alone returns 600 results, but if you look for f*** in C++ it returns 2000. So, take the numbers with more than a grain of salt. The bad thing is that Google counts are taken as a basis for a growing number of algorithms in NLP and machine learning (I co-authored a paper that does that too). Did anyone compare the results with Yahoo counts or MSN counts or Ask counts or whatever? This is not the best scientific practice, I am afraid. And I committed it too. Darn.

Job at the AIFB

Are you interested in the Semantic Web? (Well, probably yes, or else you wouldn't be reading this.) Do you want to work at the AIFB, the so-called Semantic Web Machine? (It was Sean Bechhofer who gave us this name, at the ISWC 2005.) Maybe this is your chance...

Well, if you ask me, this is the best place to work. The offices are nice, the colleagues are great, our impact is remarkable - oh well, it's loads of fun to work here, really.

We are looking for a person to work on KAON2 especially, which is a main building block of many AIFB software tools, for example my own OWL Tools, and of some European projects. Mind you, this is no easy job. But if you finished your Diploma, Master or your PhD, know a lot about efficient reasoning, and have quite some programming skills, peek at the official job offer (also available in German).

Do you dare?

Johnny Cash and Stalin

Johnny Cash was the first American to learn about Stalin's death.

At that time, Cash was a member of the Armed Forces and stationed in Germany. According to Cash, he was the one to intercept the Morse code message about Stalin's death before it was announced.

KAON2 OWL Tools V0.23

A few days ago I packaged the new release of the KAON2 OWL tools. And they moved from their old URL (which was pretty obscure: http://www.aifb.uni-karlsruhe.de/WBS/dvr/owltools ) to their new home on OntoWare: owltools.ontoware.org. Much nicer.

The OWL tools are a growing number of little tools that help people working with OWL. Besides the already existing tools, like count, filter or merge, partly enhanced, some new entered the scene: populate, that just populates an ontology randomly with instances (which may be used for testing later on) and screech, that creates a split program out of an ontology (you can find more information on OWL Screech' own website).

A very special little thing is the first beta implementation of shell. This will become a nice OWL shell that will allow you to explore and edit OWL files. No, this is not meant as a competitor to full-fledged integrated ontology development environments like OntoStudio, Protégé or SWOOP, it's rather an alternative approach. And it's just started. I hope to have autocompletion implemented pretty soon, and some more commands. If anyone wants to join, send me a mail.

KAON2 and Protégé

KAON2 is the Karlsruhe Ontology infrastructure. It is an industrial-strength reasoner for OWL ontologies, pretty fast and comparable to reasoners like Fact and Racer, which have gained from years of development. As of a few days ago, KAON2 also implements the DIG Interface! Yeah, now you can use it with your tools! Go and grab KAON2 and get a feeling for how well it fulfills your needs.

Here's a step-by-step description of how you can use KAON2 with Protégé (other DIG-based tools should work pretty much the same). Get the KAON2 package, unpack it and then go to the folder with the kaon2.jar file in it. This is the Java library that does all the magic.

Be sure to have Java 5 installed and in your path. No, Java 1.4 won't do it, KAON2 builds heavily on some of the very nice Java 5 features.

You can start KAON2 now with the following command:

java -cp kaon2.jar org.semanticweb.kaon2.server.ServerMain -registry -rmi -ontologies server_root -dig -digport 8088

Quite lengthy, I know. You will probably want to stuff this into a shell-script or batch-file so you can start your KAON2 reasoner with a simple doubleclick.

The last argument - 8088 in our example - is the port of the DIG service. Fire up your Protégé with the OWL plugin, and open the preferences window from the OWL menu. The reasoner URL will tell you where Protégé looks for a reasoner - with the above DIG port it should be http://localhost:8088. If you chose another port, be sure to enter the correct address here.
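If you prefer a small launcher over a shell script or batch file, here is a minimal sketch in Python. The Java arguments are exactly the ones from the command above; the function names, the defaults, and the idea of deriving the reasoner URL from the port are my own assumptions for illustration, not part of KAON2.

```python
import subprocess


def build_kaon2_command(dig_port=8088, ontologies_dir="server_root", jar="kaon2.jar"):
    """Assemble the KAON2 server command line shown above as an argument list."""
    return [
        "java", "-cp", jar,
        "org.semanticweb.kaon2.server.ServerMain",
        "-registry", "-rmi",
        "-ontologies", ontologies_dir,
        "-dig", "-digport", str(dig_port),
    ]


def dig_url(dig_port=8088, host="localhost"):
    """The reasoner URL to enter in the Protégé preferences for this port."""
    return f"http://{host}:{dig_port}"


def start_server(dig_port=8088):
    """Start the reasoner in the background (hypothetical helper).

    Needs Java 5 on the path and kaon2.jar in the current folder.
    """
    return subprocess.Popen(build_kaon2_command(dig_port))


print(" ".join(build_kaon2_command()))
print(dig_url())
```

Calling start_server() from the folder containing kaon2.jar would launch the server; the printed URL is what goes into the reasoner URL field.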

Now you can use the consistency checks and automatic classification and all this as provided by Protégé (or any other Ontology Engineering tool featuring the DIG interface). Protégé also tells you the time your reasoner took for its tasks - compare it with Racer and Fact, if you like. I'd be interested in your findings!

But don't forget - this is the very first release of the DIG interface. If you find any bugs, say so! They must be squashed! And don't forget: KAON2 is quite different from your usual tableaux reasoner, and so some queries are simply not possible. But the restrictions shouldn't be too severe. If you want more information, go to the KAON2 web site and check the references.

Katherine Maher on The Truth

Wikipedia is about verifiable facts from reliable sources. For Wikipedia, arguing with "The Truth" is often not effective. Wikipedians don't argue "because it's true" but "because that's what's in this source".

It is painful and upsetting to see Katherine Maher so viciously and widely attacked on Twitter. Especially for a quote repeated out-of-context which restates one of the foundations of Wikipedia.

I have worked with Katherine. We were lucky to have her at Wikipedia, and NPR is lucky to have her now.

The quote - again, taken out of its context, which is the way Wikipedia editors collaborate - is: "Our reverence for the truth might be a distraction that's getting in the way of finding common ground and getting things done."

It is taken from this TED Talk by Katherine, which provides sufficient context for the quote.

Katherine Maher to step down from Wikimedia Foundation

Today Katherine Maher announced that she is stepping down as the CEO of the Wikimedia Foundation in April.

Thank you for everything!

Keynote at SMWCon Fall 2020

I have the honor of being the invited keynote for the SMWCon Fall 2020. I am going to talk "From Semantic MediaWiki to Abstract Wikipedia", discussing fifteen years of Semantic MediaWiki, how it all started, where we are now - crossing Freebase, DBpedia, Wikidata - and now leading to Wikifunctions and Abstract Wikipedia. But, more importantly, how Semantic MediaWiki, over all these years, still holds up and what its unique value is.

Page about the talk on the official conference site: https://www.semantic-mediawiki.org/wiki/SMWCon_Fall_2020/Keynote:_From_Semantic_Wikipedia_to_Abstract_Wikipedia

Keynote at Web Conference 2021

Today, I have the honor to give a keynote at the WWW Confe... sorry, the Web Conference 2021 in Ljubljana (and in the whole world). It's the 30th Web Conference!

Join Jure Leskovec, Evelyne Viegas, Marko Grobelnik, Stan Matwin and myself!

I am going to talk about how Abstract Wikipedia and Wikifunctions aim to contribute to Knowledge Equity. Register here for free:

Update: the talk can now be watched on VideoLectures:

Knowledge Graph Conference 2019, Day 1

On Tuesday, May 7, the first Knowledge Graph Conference began. Organized by François Scharffe and his colleagues at Columbia University, it was located in New York City. The conference goes for two days, and aims at a much more industry-oriented crowd than conferences such as ISWC. This was reflected very prominently in the speaker line-up: finance especially was very well represented (no surprise, with Wall Street being just downtown).

Speakers and participants from Goldman Sachs, Capital One, Wells Fargo, Mastercard, Bank of America, and others were in the room, but also from companies in other industries, such as Astra Zeneca, Amazon, Uber, or AirBnB. The speakers and participants were rather open about their work, often listing numbers of triples and entities (which really is a weird metric to cite, but since it is readily available it is often expected to be stated), and these were usually in the billions. More interesting than the sheer size of their respective KGs were their use cases, and particularly in finance it was often ensuring compliance with insider trading rules and similar regulations.

I presented Wikidata and the idea of an Abstract Wikipedia as going beyond what a Knowledge Graph can easily express. I had the feeling the presentation was well received - it was obvious that many people in the audience were already fully aware of Wikidata and are actively using it or planning to use it. For others, particularly the SPARQL endpoint with its powerful visualization capabilities and the federated queries, and the external identifiers in Wikidata, and the approach to references for the claims in Wikidata were perceived as highlights. The proposal of an Abstract Wikipedia was very warmly received, and it was the first time no one called it out as a crazy idea. I guess the audience was very friendly, despite New York's reputation.

A second set of speakers were offering technologies and services - and I guess I belong to this second set by speaking about Wikidata - and among them were people like Juan Sequeda of Capsenta, who gave an extremely engaging and well-substantiated talk on how to bridge the chasm towards more KG adoption; Pierre Haren of Causality Link, who offered an interesting personal history through KR land from LISP to Causal Graphs; Dieter Fensel of OnLim, who had a number of really good points on the relation between intelligent assistants and their dialogue systems and KGs; Neo4J, Eccenca, Diffbot.

A highlight for me was the astute and frequent observation by a number of the speakers from the first set that the most challenging problems with Knowledge Graphs were rarely technical. I guess graph serving systems and cloud infrastructure have improved so much that we don't have to worry about these parts anymore unless you are doing crazy big graphs. The most frequently mentioned problems were social and organizational. Since Knowledge Graphs often pulled data sources from many different parts of an organization together, with a common semantics, they trigger feelings of territoriality. Who gets to define the common ontology? What if the data a team provides has problems or is used carelessly, who's at fault? What if others benefit from our data more than we did even though we put all the effort in to clean it up? How do we get recognized for our work? Organizational questions were often about a lack of understanding, especially among engineers, for fundamental Knowledge Graph principles, and a lack of enthusiasm in the management chain - especially when the costs are being estimated and the social problems mentioned before become apparent. One particularly visible moment was when Bethany Sehon from Capital One was asked about the major challenges to standardizing vocabularies - and her first answer was basically "egos".

All speakers talked about the huge benefits they reaped from using Knowledge Graphs (such as detecting likely cliques of potential insider trading that later indeed got convicted) - but then again, this is to be expected since conference participation is self-selecting, and we wouldn't hear of failures in such a setting.

I had a great day at the inaugural Knowledge Graph Conference, and am sad that I have to miss the second day. Thanks to François Scharffe for organizing the conference, and thanks to the sponsors, OntoText, Collibra, and TigerGraph.

For more, see:

Knowledge Graph Technology and Applications 2019

Last week, on May 13, the Knowledge Graph Technology and Applications workshop happened, co-located with the Web Conference 2019 (formerly known as WWW), in San Francisco. I was invited to give the opening talk, and talked about the limits of Knowledge Graph technologies when trying to express knowledge. The talk resonated well.

Just like in last week's KGC, the breadth of KG users is impressive: NASA uses KGs to support air traffic management, Uber talks about the potential for their massive virtual KG over 200,000 schemas, LinkedIn, Alibaba, IBM, Genentech, etc. I found particularly interesting that Microsoft has not one, but at least four large Knowledge Graphs: the generic Knowledge Graph Satori; an Academic Graph for science, papers, citations; the Enterprise Graph (mostly LinkedIn), with companies, positions, schools, employees and executives; and the Work graph about documents, conference rooms, meetings, etc. All in all, they boasted more than a trillion triples (why is it not a single graph? No idea).

Unlike last week, the focus was less on sharing experiences when working with Knowledge Graphs, but more on academic work, such as query answering, mixing embeddings with KGs, scaling, mapping ontologies, etc. Given that it is co-located with the Web Conference, this seems unsurprising.

One interesting point that was raised was the question of common sense: can we, and how can we use a knowledge graph to represent common sense? How can we say that a box of chocolate may fit in the trunk of a car, but a piano would not? Are KGs the right representation for that? The question remained unanswered, but lingered through the panel and some QnA sessions.

The workshop was very well visited - it got the second largest room of the day, and the room didn’t feel empty, but I have a hard time estimating how many people were there (about 100-150?). The audience was engaged.

The connection with the Web was often rather tenuous, unless one thinks of KGs as inherently associated with the Web (maybe because they often could use Semantic Web standards? But also often they don’t). On the other hand, it is a good outlet within the Web Conference for the Semantic Web crowd, and a way to make them mingle more with the KG crowd. I did see a few people brought together in a room who have often been separated, and I was able to point a few academic researchers to enterprise employees who would benefit from each other.

Thanks to Ying Ding from Indiana University and the other organizers for organizing the workshop, and for all the discussion and insights it generated!

Update: corrected that Uber talked about the potential of their knowledge graph, not about their realized knowledge graph. Thanks to Joshua Shivanier for the correction! Also added a paragraph on common sense.

Languages with the best lexicographic data coverage in Wikidata 2023

Languages with the best coverage as of the end of 2023

- English 92.9%

- Spanish 91.3%

- Bokmal 89.1%

- Swedish 88.9%

- French 86.9%

- Danish 86.9%

- Latin 85.8%

- Italian 82.9%

- Estonian 81.2%

- Nynorsk 80.2%

- German 79.5%

- Basque 75.9%

- Portuguese 74.8%

- Malay 73.1%

- Panjabi 71.0%

- Slovak 67.8%

- Breton 67.3%

What does the coverage mean? Given a text (usually Wikipedia in that language, but in some cases a corpus from the Leipzig Corpora Collection), it is the share of the word occurrences in that text that are already represented as forms in Wikidata's lexicographic data.

The list contains all languages where the data covers more than two thirds of the selected corpus.
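To make the coverage definition concrete, here is a minimal sketch. It assumes the text is already split into tokens and that the known forms are available as a plain set of strings; the tokenization, the toy data and the function name are assumptions for illustration and not the actual pipeline behind the numbers above.

```python
from collections import Counter


def coverage(tokens, known_forms):
    """Share of token occurrences whose surface form is in known_forms."""
    if not tokens:
        return 0.0
    counts = Counter(tokens)
    covered = sum(n for token, n in counts.items() if token in known_forms)
    return covered / len(tokens)


# Toy corpus and toy set of forms, made up for this example.
tokens = "the cat sat on the mat".split()
known_forms = {"the", "cat", "on"}

share = coverage(tokens, known_forms)
print(f"{share:.1%}")
# A language makes the list above if its share is more than two thirds.
print(share > 2 / 3)
```

Note that this counts occurrences, not distinct words, so a single frequent form such as "the" contributes more to the coverage than a rare one.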

Last Unicorn dreamcast

One thing my sister and I were doing from time to time was to think, if the Last Unicorn was remade as a live action movie, who would we cast in the different roles? It is sad to see how many of our dreamcast have since died: our favorite Prince Lir was Heath Ledger, and King Haggard, unsurprisingly, Christopher Lee.

Since I was reading the book with Little One, and now watched the movie again (for the 120th time or so), I was again going through my current dreamcast. Here we go.

- Lady Amalthea - Anya Taylor-Joy

- Schmendrick - Zach Braff, Donald Glover

- Molly Grue - Mia Farrow

- Prince Lir - Owen Wilson, no, getting too old; Liam Hemsworth? Chloe Moretz would be an interesting choice

- King Haggard - Anthony Hopkins, Jack Nicholson

Since it is en vogue, I also asked Bard and ChatGPT. Here's Bard:

- Jeff Bridges as Schmendrick the Magician

- Mia Farrow as Molly Grue

- Angela Lansbury as the Unicorn

- Daryl Hannah as Filidor

- Jeff Goldblum as Schmendrick's sidekick, Bungle

- Peter Dinklage as the Red Bull

- Uma Thurman as Mommy Fortuna

- Alan Rickman as King Haggard

OK, Alan Rickman is a great choice, but unfortunately dead. Uma Thurman as Mommy Fortuna is weird. Peter Dinklage as the Red Bull... why does the Red Bull need to be cast at all? Who are Bungle and Filidor? Angela Lansbury as the Unicorn / Lady Amalthea is... not the right choice. Also dead. Mia Farrow and Jeff Bridges are too obvious choices given their roles in the cast of the animation movie, also Angela Lansbury is from the original cast. I would give Bard a D for that answer.

ChatGPT says:

- Unicorn - Saoirse Ronan or Emma Watson

- Schmendrick - Timothee Chalamet or Eddie Redmayne

- Molly Grue - Meryl Streep or Helen Mirren

- King Haggard - Ian McKellen or Christopher Lee (if he were still alive)

- Prince Lir - Tom Holland or Ansel Elgort

- Mommy Fortuna - Tilda Swinton or Cate Blanchett

Not even close to my choice, but heck, those are good choices. Molly Grue and Mommy Fortuna seem both a bit over-cast. Prince Lir and Schmendrick are pretty brilliant choices. I am not particularly impressed by Emma Watson for Lady Amalthea, I could see Saoirse Ronan work.

I'd give a B+ to ChatGPT.

I feel like ChatGPT seems to go for actors and actresses that are popular with the crowd who likes The Last Unicorn? Not the worst choice.

I followed up with Bard to see who Bungle and Filidor are, because they appear neither in the book nor in the movie, if I remember correctly. So, "Bungle is a red unicorn who is Schmendrick's sidekick in the novel The Last Unicorn. He is a clumsy and incompetent unicorn, but he is also a loyal and brave friend. Bungle is played by Jeff Goldblum in the 1982 animated film adaptation of the novel." And "Filidor is a bard in the novel The Last Unicorn. He is a wandering minstrel who travels the land singing songs and telling stories. Filidor is a wise and kind-hearted man, and he is always willing to help those in need. He is played by Daryl Hannah in the 1982 animated film adaptation of the novel." That's entirely a fabrication.

Lexical masks in JSON

We have released lexical masks as ShEx files before, schemata for lexicographic forms that can be used to validate whether the data is complete.

We saw that it was quite challenging to turn these ShEx files into forms for entering the data, such as Lucas Werkmeister’s Lexeme Forms. So we adapted our approach slightly to publish JSON files that keep the structures in an easier to parse and understand format, and to also provide a script that translates these JSON files into ShEx Entity Schemas.

Furthermore, we published more masks for more languages and parts of speech than before.

Full documentation can be found on wiki: https://www.wikidata.org/wiki/Wikidata:Lexical_Masks#Paper

Background can be found in the paper: https://www.aclweb.org/anthology/2020.lrec-1.372/

Thanks Bruno, Saran, and Daniel for your great work!

Libertarian cities

I usually try to contain my "Schadenfreude", but reading this article made it really difficult to do so. It starts with the story of Rio Verde Foothills and its lack of water supply after it was intentionally built to circumvent zoning regulations regarding water supply, and lists a few other examples, such as

- "Grafton, New Hampshire. It’s a tiny town that was taken over by libertarians who moved there en masse to create their vision of heaven on earth. They voted themselves into power, slashed taxes and cut the town’s already minuscule budget to the bone. Journalist Matthew Hongoltz-Hetling recounts what happened next:

- 'Grafton was a poor town to begin with, but with tax revenue dropping even as its population expanded, things got steadily worse. Potholes multiplied, domestic disputes proliferated, violent crime spiked, and town workers started going without heat. ...'

- Then the town was taken over by bears."

The article is worth reading:

The Wikipedia article is even more damning:

- "Grafton is an active hub for Libertarians as part of the Free Town Project, an offshoot of the Free State Project. Grafton's appeal as a favorable destination was due to its absence of zoning laws and a very low property tax rate. Grafton was the focus of a movement begun by members of the Free State Project that sought to encourage libertarians to move to the town. After a rash of lawsuits from Free Towners, an influx of sex offenders, an increase of crime, problems with bold local bears, and the first murders in the town's history, the Libertarian project ended in 2016."

Lion King 2019

Wow. The new version of the Lion King is technically brilliant, and story-wise mostly unnecessary (but see below for an exception). It is a mostly beat-for-beat retelling of the 1994 animated version. The graphics are breathtaking, and they show how far computer-generated imagery has come. For a measly million dollars per minute of film you can get a photorealistic animal movie. Because of the photorealism, it also loses some of the charm and the emotions that the animated version carried - in the original the animals were much more anthropomorphic, and the dancing was much more exaggerated, which the new version gave up. This is most noticeable in the song scene for "I can't wait to be king", which used to be a psychedelic, color shifted sequence with elephants and tapirs and giraffes stacked upon each other, replaced by a much more realistic sequence full of animals and fast cuts that simply looks amazing (I never was a big fan of the psychedelic music scenes that were so frequent in many animated movies, so I consider this a clear win).

I want to focus on the main change, and it is about Scar. I know the 1994 movie by heart, and Scar is its iconic villain, one of the villains that formed my understanding of a great villain. So why would the largest change be about Scar, changing him profoundly for this movie? How risky a choice in a movie that partly recreates whole sequences shot by shot?

There was one major criticism about Scar, and that is that he played with stereotypical tropes of gay grumpy men, frustrated, denied, uninterested in what the world is offering him, unable to take what he wants, effeminate, full of cliches.

That Scar is gone, replaced by a much more physically threatening Scar, one whose philosophy in life is that the strongest should take what they want. Chiwetel Ejiofor's voice for Scar is scary, threatening, strong, dominant, menacing. I am sure that some people won't like him, as the original Scar was also a brilliant villain, but this leads immediately to my big criticism of the original movie: if Scar was only half as effing intelligent as shown, why did he do such a miserable job in leading the Pride Lands? If he was so much smarter than Mufasa, why did the thriving Pride Lands turn into a wasteland, threatening the subsistence of Scar and his allies?

The answer in the original movie is clear: it's the absolutist identification of country and ruler. Mufasa was good, therefore the Pride Lands were doing well. When Scar takes over, they become a wasteland. When Simba takes over, in the next few shots, they start blooming again. Good people, good intentions, good outcomes. As simple as that.

The new movie changes that profoundly - and in a very smart way. The storytellers at Disney really know what they're doing! Instead of following the simple equation given above, they make it an explicit philosophical choice in leadership. This time around, the whole Circle of Life thing is not just an Act One lesson, but is the major difference between Mufasa and Scar. Mufasa describes a great king as searching for what they can give. Scar is about might is right, and about the strongest taking whatever they want. This is why he overhunts and allows overhunting. This is why the Pride Lands become a wasteland. Now the decline of the Pride Lands makes sense, and also why the return of Simba and his different style as a king would make a difference. The Circle of Life now became important for the whole movie, at the same time tying in with the reinterpretation of Scar, and also explaining the difference in outcome.

You can probably tell, but I am quite amazed at this feat in storytelling. They took a beloved story and managed to improve it.

Unfortunately, the new Scar also means that the song Be Prepared doesn't really work as it used to, and thus the song also got shortened and very much changed in a movie that became much longer otherwise. I am not surprised they even wanted to remove it, and now I understand why (even though back then I grumbled about it). They also removed the Leni Riefenstahl imagery from the new version which was there in the original one, which I find regrettable, but obviously necessary given the rest of the movie.

A few minor notes.

The voice acting was a mixed bag. Beyonce was surprisingly bland (speaking, her singing was beautiful), and so was John Oliver (singing, his speaking was perfect). I just listened again to I can't wait to be king, and John Oliver just sounds so much less emotional than Rowan Atkinson. Pity.

Another beautiful scene was the scene where Rafiki receives the message that Simba is still alive. In the original, this was a short transition of Simba ruffling up some flowers, and the wind takes them to Rafiki, he smells them, and realizes it is Simba. Now the scene is much more elaborate, funnier, and is reminiscent of Walt Disney's animal movies, which is a beautiful nod to the company founder. Simba's hair travels with the wind, birds, a giraffe, an ant, and more, until it finally reaches the shaman's home.

One of my best laughs was also due to another smart change: in Hakuna Matata, when they retell Pumbaa's story (with an incredibly cute little baby Pumbaa), Pumbaa laments that all his friends leaving him got him "downhearted, every time that he farted", and immediately complains to Timon as to why he didn't stop him singing it - a play on the original's joke, where Timon interrupts Pumbaa before he finishes the line with "Pumbaa! Not in front of the kids.", looking right at the camera and breaking the fourth wall.

Another great change was to give the Hyenas a bit more character - the interactions between the Hyena who wasn't much into personal space and the other who rather was, were really amusing. Unlike with the original version the differences in the looks of the Hyenas are harder to make out, and so giving them more personality is a great choice.

All in all, I really loved this version. Seeing it on the big screen pays off for the amazing imagery that really shines on a large canvas. I also love the original, and the original will always have a special place in my heart, but this is a wonderful tribute to a brilliant movie with an exceptional story.

Little One's first GIF

Little One made her first GIF!

Little Richard and James Brown

When Little Richard started becoming more famous, he already had signed up for a number of gigs but was then getting much better opportunities coming in. He was worried about his reputation, so he did not want to cancel the previously agreed gigs, but also did not want to miss the new opportunities. Instead he sent a different singer who was introduced as Little Richard, because most concert goers back then did not know what exactly Little Richard looked like.

The stand-in was James Brown, who at this point was unknown, and who later had a huge career, becoming an inaugural inductee to the Rock and Roll Hall of Fame in 1986, in the same class as Little Richard.

(I am learning a lot from and am enjoying Andrew Hickey's brilliant podcast "A History of Rock and Roll in 500 Songs")

Live from ICAIL

"Your work reminds me a lot of abduction, but I can't find you mention it in the paper..."

"Well, it's actually in the title."

Long John and Average Joe

You may know about Long John Silver. But who's the longest John? Here's the answer according to Wikidata: https://w.wiki/4dFL

What about your Average Joe? Here's the answer about the most average Joe, based on all the Joes in Wikidata: https://w.wiki/4dFR

Note, the average height of a Joe in Wikidata is 1.86 m or 6'1", which is quite a bit taller than the average height in the population. This is a data collection and coverage issue: it is much more likely to have the height for a basketball player than for an author in Wikidata.

Just two silly queries for Wikidata, which are nice ways to show off the data set and what one can do with the SPARQL query endpoint. Especially the latter one shows off a rather interesting and complex SPARQL query.
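For readers who don't speak SPARQL, the idea behind the "most average Joe" can be sketched in a few lines of Python: take the mean height over all Joes, then pick the Joe closest to it. This is only an illustration of the idea, not a translation of the linked query, and the names and heights below are made up.

```python
def mean_height(heights_cm):
    """Average height over all people in the mapping (name -> height in cm)."""
    return sum(heights_cm.values()) / len(heights_cm)


def most_average(heights_cm):
    """Name of the person whose height is closest to the mean."""
    mean = mean_height(heights_cm)
    return min(heights_cm, key=lambda name: abs(heights_cm[name] - mean))


def cm_to_feet_inches(cm):
    """Convert centimeters to (feet, inches), rounded to the nearest inch."""
    total_inches = round(cm / 2.54)
    return divmod(total_inches, 12)


# Hypothetical Joes, not actual Wikidata entries.
joes = {"Joe A": 170.0, "Joe B": 186.0, "Joe C": 202.0}

print(mean_height(joes))
print(most_average(joes))
print(cm_to_feet_inches(186))
```

The same coverage caveat applies here as in Wikidata: the result is only as representative as the set of people who have a height recorded at all.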

Machine Learning and Metrology

There are many, many papers in machine learning these days. This paper takes a step back and thinks about how researchers measure their results, and how good a specific type of benchmark - crowdsourced golden sets - can even be. It brings a convincing example based on word similarity, using terminology and concepts from metrology, to show how many results that have been reported are actually not supported by the golden set, because the resolution of the golden set is actually insufficient. So there might be no improvement at all, and that new architecture might just be noise.

I think this paper is really worth the time of people in the research field. Written by Chris Welty, Lora Aroyo, and Praveen Paritosh.

Mail problems

The last two days my mail account had trouble. If you could not send something to me, sorry! Now it should work again.

Since it is hard to guess who tried to eMail me in the last two days (I guess three persons right), I hope to reach some this way.

Major bill for US National Parks passed

Good news: the US Senate has passed a bipartisan large Public Lands Bill, which will provide billions right now and continued sustained funding for National Parks.

There are a number of interesting and good parts about this, besides the obvious one that National Parks are being funded better and more predictably:

- the main reason why this passed and was made was that the Evangelical movement in the US is increasingly reckoning that Pro-Life also means Pro-Environment, and this really helped with making this bill a reality. This is major as it could set the US on a path to become a more sane nation regarding environmental policies. If this could also extend to global warming, that would be wonderful, but let's for now be thankful for any momentum in this direction.

- the sustained funding comes from oil and gas operations, which has a certain satisfying irony to it. I expect this part to backfire a bit somehow, but I don't know how yet.

- Even though this is a political move by Republicans in order to save two of their Senators this fall, many Democrats supported it because the substance of the bill is good. Let's build on this momentum of bipartisanship.

- This has nothing to do with the pandemic, for once, but was in the works for a long time. So all of the reasons above are true even without the pandemic.

Map of current Wikidata edits

It starts entirely black and then listens to Wikidata edits. Every time an item with a coordinate is edited, a blue dot in the corresponding place is made. So slowly, over time, you get a more and more complete map of Wikidata items.

If you open the developer console, you can get links and names of the items being displayed.

The whole page is less than a hundred lines of JavaScript and HTML, and it runs entirely in the browser. It uses the Wikimedia Stream API and the Wikidata API, and has no code dependencies. Might be fun to take a look if you're so inclined.

https://github.com/vrandezo/wikidata-edit-map/blob/main/index.html
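The approach described above can be sketched roughly as follows. This is not the code from the linked page, which is JavaScript; it is a minimal Python sketch of two steps, under some assumptions: that edit events arrive as server-sent-event "data:" lines carrying JSON with "wiki", "namespace" and "title" fields (as in the Wikimedia recent changes stream), and that the map uses a simple equirectangular projection. The function names are hypothetical, and looking up an item's coordinate through the Wikidata API is left out.

```python
import json


def parse_sse_data(line):
    """Return the decoded JSON payload of an SSE 'data:' line, else None."""
    if not line.startswith("data:"):
        return None
    return json.loads(line[len("data:"):].strip())


def edited_item_id(event):
    """Return the item ID (e.g. 'Q42') for a Wikidata item edit, else None."""
    # Assumption: items live in namespace 0 of the wiki called 'wikidatawiki'.
    if event.get("wiki") != "wikidatawiki" or event.get("namespace") != 0:
        return None
    title = event.get("title", "")
    return title if title.startswith("Q") else None


def to_pixel(lat, lon, width, height):
    """Equirectangular projection of a coordinate onto a width x height canvas."""
    x = (lon + 180.0) / 360.0 * width
    y = (90.0 - lat) / 180.0 * height
    return x, y


# A hand-written sample line, not a real event from the stream.
sample = 'data: {"wiki": "wikidatawiki", "namespace": 0, "title": "Q42"}'
event = parse_sse_data(sample)
print(edited_item_id(event), to_pixel(0.0, 0.0, 360, 180))
```

Keeping the parsing and the projection as small pure functions means they can be tried out without a network connection; only the stream listener and the coordinate lookup need to talk to the APIs.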

Markus Krötzsch ISWC 2022 keynote

A brilliant keynote by Markus Krötzsch for this year's ISWC.

"The era of standard semantics has ended"

Yes, yes! 100%! That idea was in the air for a long time, but Markus really captured it in clear and precise language.

This talk is a great birthday present for Wikidata's ten-year anniversary tomorrow. Over the last years, the Wikidata community has defined numerous little pockets of semantics for various use cases, shared SPARQL queries to capture some of those, and identified and shared constraints and reasoning patterns. Wikidata also connects to thousands of external knowledge bases and authorities, each with their own constraints, which is only feasible because we can use, in a much more fine-grained way, the semantics we need for a given context. The same is true for the billions of Schema.org triples out there, and how they can be brought together.

The middle part of the talk goes into theory, but make sure to listen to the passionate summary at 59:40, where he emphasises shared understanding, that knowledge is human, and the importance of community.

"Why have people ever started to share ontologies? What made people collaborate in this way?" Because knowledge is human. Because knowledge is often more valuable when it is shared. The data available on the Web of linked data, including Wikidata, Data Commons, Schema.org, can be used in many, many ways. It provides a common foundation of knowledge that enables many things. We are far away from using it to its potential.

A remark on triples, because I am still thinking too much about them: yes to Markus's comments: "The world is not triples, but we make it triples. We break down the world into triples, but we don't know how to rebuild it. What people model should follow the technical format is wrong, it should be the other way around" (rough quotes)

At 1:17:56, Markus calls back to our discussions of the Wikidata data model in 2012. I remember how he was strongly advocating for more standard semantics (as he says), and I was pushing for more flexible knowledge representations. It's great to see the synthesis in this talk.

May 2019 talks

I am honored to give the following three invited talks in the next few weeks:

- Knowledge Graph Conference, Columbia University, New York, May 7, 2019

- Workshop on Knowledge Graph Technology and Applications, co-located with The Web Conference in San Francisco, May 13, 2019

- Wiki Workshop 2019, co-located with The Web Conference in San Francisco, May 14, 2019

The topics will all be on Wikidata, how the Wikipedias use it, and the Abstract Wikipedia idea.

Maybe the hottest conference ever

The Wikipedia Hacking Days are over. We visited Siggraph and had a tour through the MIT Media Lab. Some of the people around were Brion Vibber (Wikimedia's CTO), Ward Cunningham (the guy who invented wikis), Dan Bricklin (the guy who invented spreadsheets), Aaron Swartz (a web wunderkind, he wrote the RSS specs at 14), Jimbo Wales (the guy who made Wikipedia happen), and many others. We have been working at the One Laptop per Child offices, home to what is easily the coolest project in the world.

During our stay at the Hacking Days, we had the chance to meet up with the local IBM Semantic Web dev staff and Elias Torres, who showed us the fabulous work they are doing right now on the Semantic Web technology stack (never before was rapid application deployment so rapid). And we also met up with the Simile project people, where we talked about connecting their stuff like Longwell and Timeline to the Semantic MediaWiki. We actually tried Timeline out on the ISWC2006 conference page, and the RDF worked out of the box, giving us a timeline of the workshop deadlines. Yay!

Wikimania2006 started today at the Harvard Law School. There was not only a keynote by Lawrence Lessig, as great as expected, but also our panel on the Semantic Wikipedia. We had an unexpected guest (who didn't get introduced, so most people didn't even realize he was there), Tim Berners-Lee, probably still jetlagged from a trip to Malaysia. The session was well received, and Brion said that he sees us on the way to getting the extension into Wikipedia proper. Way cool. And we got bug reports from Sir Timbl again.

And there are still two days to go. If you're around and would like to meet, drop a note.

Trust me — it all sounds like a dream to me.

Meat Loaf

"But it was long ago

And it was far away

Oh God, it seemed so very far

And if life is just a highway

Then the soul is just a car

And objects in the rear view mirror may appear closer than they are."

Bat out of Hell II: Back into Hell was the first album I really listened to, over and over again. Where I translated the songs to better understand them. Paradise by the Dashboard Light is just a fun song. He was in cult classic movies such as The Rocky Horror Picture Show, Fight Club, and Wayne's World.

Many of the words we should remember him for are by Jim Steinman, who died last year and wrote many of the lyrics that became famous as Meat Loaf's songs. Some of Meat Loaf's own words are better not remembered.

Rock in Peace, Meat Loaf! You have arrived at your destination.

Meeting opportunities

In an interview in Focus (German), Andreas Weigend says that publishing his travel arrangements on his blog helped him meet interesting people and opened up unexpected opportunities. I actually noticed the same thing when I wrote about coming to Wikimania this summer. And those were great meetings!

So, now, here are the places I will be in the next weeks.

- Oct 18-Oct 20, Madrid: SEKT meeting

- Oct 22-Oct 26, Milton Keynes (passing through London): Talk at KMi Podium, Open University, on Semantic MediaWiki. There's a webcast! Subscribe, if you like.

- Oct 30-Nov 3, Montpellier: ODBASE, and especially OntoContent. Giving a talk there on unit testing for ontologies.

- Nov 5-Nov 13, Athens, Georgia: ISWC and OWLED

- Nov 15-Nov 17, Ipswich: SEKT meeting

- Nov 27-Dec 1, Vienna: Keynote at Semantics on Semantic Wikipedia

- Dec 13-17, Ljubljana: SEKT meeting

- Dec 30-Jan 10, Mumbai and Pune: the travel is private, but this doesn't mean at all that we can't meet for work if you're around that part of the world

Just mail me if you'd like to meet.